New Data API for Google Analytics 4

Published: Mar 30th, 2021 | Updated: Jun 14th, 2021 | 20 min read

The new version of Google Analytics, with an innovative approach to data structure, was introduced in summer 2019 under the name Google Analytics App + Web. In autumn 2020, Google took it out of beta and rebranded it as Google Analytics 4. While it is still not recommended to move your whole data measurement to the new version, since it is being improved gradually, it definitely deserves attention because Google is introducing many new features there. Google Analytics 4 is based on events and their parameters. As its previous name implies, it combines data from app and web analytics in one property, and it serves as a flexible tool for cross-platform analysis. To learn more about Google Analytics 4, follow our blog for an upcoming article in which we will discuss its changes and new features in detail.

In this article, we look at ways to query data from Google Analytics 4 using the Google Analytics Data API (abbreviated below as GA4 API). The GA4 API is still available only in beta, but it is never too early to study its features and learn how to benefit from them. We present the API mainly through examples of the JSON request body, which can be used in cURL, a plain HTTP request, or JavaScript. At the end of the article, you will find sample code that runs a query in Python using the google-analytics-data library provided directly by Google.

Remember that this article is only about the Data API, which means the API for querying the measured information about your sites. Google is also introducing a new Admin API for displaying and changing the settings of Google Analytics. We will investigate that in a future article.

One simple query - querying data using the runReport method

The easiest way to extract data is the runReport method, which runs a single request and returns a single set of results. To obtain data, send a POST request to the URL https://analyticsdata.googleapis.com/v1beta/properties/<GA4_PROPERTY_ID>:runReport, replacing the placeholder <GA4_PROPERTY_ID> with your GA4 property ID. The basic parameters of a single query have a structure very similar to queries constructed for the Universal Analytics API (denoted below as the original API). Therefore, if you currently use only the basic query functionality of the original API, only minor adjustments will be needed. Bigger differences from the original API lie in further options and extensions, for example the quotas report or the separate request for pivot data. These are presented in later sections of the article.

Differences between data queries

To illustrate the similarities and differences we show an example of a simple query of sessions over 3 days. This query gives you information about how your sessions have evolved in the last three days. Below we include the request body for both the GA4 API and the original API. As you can see below, there are some differences between the queries.

- The most noticeable difference is that the query contains no parameter with a view ID or property ID. This information is passed directly in the request URL (as mentioned above).

- The GA4 API makes the naming of dimensions and metrics consistent: both are specified in the parameter name, not in expression for metrics and name for dimensions as before.

- Lastly, orderBys has a slightly different structure: each entry states whether you order by a dimension, a metric, or a pivot group, and sets the direction with the boolean desc flag.

The query for GA4 example

{
  "dateRanges": [
    {"startDate": "2021-01-04", "endDate": "2021-01-06"}
  ],
  "metrics": [
    {"name": "sessions"}
  ],
  "dimensions": [
    {"name": "date"}
  ],
  "orderBys": [
    {
      "metric": {"metricName": "sessions"},
      "desc": true
    }
  ]
}
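As a minimal sketch of how the request body above could be sent from Python with only the standard library, the snippet below prepares the POST request without sending it. The property ID `123456789` and the `<ACCESS_TOKEN>` string are placeholders, and the helper name `build_run_report_request` is our own. The URL uses the same `properties/{property_id}` form as the Python client call at the end of this article.

```python
import json
import urllib.request

API_ROOT = "https://analyticsdata.googleapis.com/v1beta"


def build_run_report_request(property_id, body, access_token):
    """Prepare (but do not send) a POST request for the runReport method."""
    url = f"{API_ROOT}/properties/{property_id}:runReport"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Same query as the GA4 example above: sessions per day, sorted descending.
body = {
    "dateRanges": [{"startDate": "2021-01-04", "endDate": "2021-01-06"}],
    "metrics": [{"name": "sessions"}],
    "dimensions": [{"name": "date"}],
    "orderBys": [{"metric": {"metricName": "sessions"}, "desc": True}],
}

# Placeholder property ID and token; replace both with real values.
request = build_run_report_request("123456789", body, "<ACCESS_TOKEN>")
# urllib.request.urlopen(request) would send it; omitted because it needs
# real credentials.
```

Building the request separately from sending it keeps the URL and body easy to inspect before any quota is spent.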

The query for UA for comparison

{
  "viewId": "XXXXXXXXX",
  "dateRanges": [
    {"startDate": "2021-01-04", "endDate": "2021-01-06"}
  ],
  "metrics": [
    {"expression": "ga:sessions", "alias": ""}
  ],
  "dimensions": [
    {"name": "ga:date"}
  ],
  "orderBys": [
    {"sortOrder": "DESCENDING", "fieldName": "ga:sessions"}
  ]
}

Differences between results

The results have a comparable structure as well; the GA4 API tends to make the results more consistent and to omit additional information unless it is requested explicitly. Below we present the results for the queries specified above and list the differences between them.

- Dimension and metric values have the same structure in the GA4 API, in contrast to the original API, where dimension values are presented in an array while metrics are in an object with an array of values. This simplifies data extraction.

- Summary values are not included automatically in the GA4 API. To receive aggregated metrics, you need to specify which aggregations you want in the new parameter metricAggregations, with the options TOTAL, MINIMUM, MAXIMUM, and COUNT. You can therefore receive the same information as through the original API, but you won't get it automatically.

- The column headers are separate for metrics and dimensions but, in contrast to the original API, they have the same structure for both. This corresponds to the consistency introduced in presenting values and in specifying the names of metrics and dimensions.

The results from GA4 example:

{
  "metricHeaders": [
    {"name": "sessions", "type": "TYPE_INTEGER"}
  ],
  "rows": [
    {
      "dimensionValues": [{"value": "20210104"}],
      "metricValues": [{"value": "12900"}]
    },
    {
      "dimensionValues": [{"value": "20210105"}],
      "metricValues": [{"value": "10700"}]
    },
    {
      "dimensionValues": [{"value": "20210106"}],
      "metricValues": [{"value": "11300"}]
    }
  ],
  "metadata": {},
  "dimensionHeaders": [
    {"name": "date"}
  ],
  "rowCount": 3
}

The results from UA for comparison:

{
  "columnHeader": {
    "dimensions": ["ga:date"],
    "metricHeader": {
      "metricHeaderEntries": [
        {"name": "ga:sessions", "type": "INTEGER"}
      ]
    }
  },
  "data": {
    "rows": [
      {"dimensions": ["20210104"], "metrics": [{"values": ["12900"]}]},
      {"dimensions": ["20210105"], "metrics": [{"values": ["10700"]}]},
      {"dimensions": ["20210106"], "metrics": [{"values": ["11300"]}]}
    ],
    "totals": [{"values": ["34900"]}],
    "rowCount": 3,
    "minimums": [{"values": ["10700"]}],
    "maximums": [{"values": ["12900"]}]
  }
}

Overall, as the example shows, the structure of both the query and the response is very similar, with some steps towards consistency in how dimensions and metrics are specified and presented. There seems to be no obstacle to moving simple queries from the original API to the GA4 API. So, let us investigate what the GA4 API brings in addition.
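Because dimension and metric values share one shape in the GA4 response, flattening it takes only a few lines. The following is a sketch that assumes the JSON response has already been parsed into a Python dict; the helper name `flatten_report` is our own, and the sample data is a shortened copy of the GA4 result shown above.

```python
def flatten_report(response):
    """Turn a GA4 runReport JSON response into a list of {column: value} dicts."""
    dim_names = [h["name"] for h in response.get("dimensionHeaders", [])]
    met_names = [h["name"] for h in response.get("metricHeaders", [])]
    records = []
    for row in response.get("rows", []):
        dims = [v["value"] for v in row.get("dimensionValues", [])]
        mets = [v["value"] for v in row.get("metricValues", [])]
        # Dimensions and metrics are read the same way: a list of {"value": ...}
        records.append(dict(zip(dim_names + met_names, dims + mets)))
    return records


sample = {
    "dimensionHeaders": [{"name": "date"}],
    "metricHeaders": [{"name": "sessions", "type": "TYPE_INTEGER"}],
    "rows": [
        {"dimensionValues": [{"value": "20210104"}], "metricValues": [{"value": "12900"}]},
        {"dimensionValues": [{"value": "20210105"}], "metricValues": [{"value": "10700"}]},
    ],
}
records = flatten_report(sample)
```

Note that all values arrive as strings, so metrics still need converting (for example with `int`) before any arithmetic.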

Further parameters in a single query

Quotas report

One of the new features offered by the GA4 API is the option to view the quotas for a given property. This information warns you when you are nearing any of the thresholds, and it tells you how many tokens a given request consumed, because requests in the GA4 API cost a different number of tokens depending on their complexity. If you run too many complex requests, you will reach the limit of your available quota and may need to wait for the next hour or the next day, depending on which quota you have exhausted.

The quota information can be requested by setting the optional parameter returnPropertyQuotas to true. This adds an object to the results on the same level as rows or headers. The object contains five pieces of quota information. First, it tells you the number of tokens consumed by the current query and the tokens remaining per day and per hour. According to the quotas report in the included example, a standard property has in total 25,000 tokens per day and 5,000 tokens per hour available for the GA4 API. The request that returned this information along with other data consumed 5 tokens. Additionally, the object reports the remaining permitted concurrent requests and permitted server errors for the given project. Only 10 requests can run in parallel, and there can be no more than 10 server errors (responses with code 500 or 503) in an hour for the whole project; otherwise, Google processes no further queries until the hour passes. Lastly, up to 120 requests with potentially thresholded dimensions are allowed per hour. Among the potentially thresholded dimensions are userAgeBracket, userGender, brandingInterest, audienceId, and audienceName. This limit is imposed to prevent inference of user demographics or interests.

"propertyQuota": {

"tokensPerDay": {

"consumed": 5,

"remaining": 24986

},

"tokensPerHour": {

"consumed": 5,

"remaining": 4990

},

"concurrentRequests": {

"remaining": 10

},

"serverErrorsPerProjectPerHour": {

"remaining": 10

},

"potentiallyThresholdedRequestsPerHour": {

"remaining": 120

}

}
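To act on the quotas report, a script can compare the `remaining` values against thresholds of its own choosing. This is only a sketch: the helper `quotas_below` and the threshold values are our own illustration, and the input is the propertyQuota object from the example above, parsed into a dict.

```python
def quotas_below(property_quota, thresholds):
    """Return the names of quotas whose 'remaining' value is below a threshold.

    A quota missing from the report counts as 0 remaining, so it is flagged.
    """
    return [
        name
        for name, minimum in thresholds.items()
        if property_quota.get(name, {}).get("remaining", 0) < minimum
    ]


# The propertyQuota object from the example above.
property_quota = {
    "tokensPerDay": {"consumed": 5, "remaining": 24986},
    "tokensPerHour": {"consumed": 5, "remaining": 4990},
    "concurrentRequests": {"remaining": 10},
    "serverErrorsPerProjectPerHour": {"remaining": 10},
    "potentiallyThresholdedRequestsPerHour": {"remaining": 120},
}

# Illustrative thresholds only; pick values that suit your workload.
warnings = quotas_below(
    property_quota,
    {"tokensPerDay": 1000, "tokensPerHour": 5000, "concurrentRequests": 2},
)
```

With these illustrative thresholds only `tokensPerHour` is flagged, since 4,990 remaining tokens is below the chosen minimum of 5,000.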

Notes on filters

Next, we include a comment on the filters in the GA4 API. The filters for metrics and dimensions are separated in the same way as for the original API. There is no filtersExpression parameter that would allow simple filters to be written in one line.

However, the dimension and metric filters are now much more flexible regarding combinations of multiple filters together. In the original API, you could combine multiple filters on only one level, specifying that either all filters should hold (using the and operator) or that it is sufficient for only one of the filters to be satisfied (using the or operator). In the GA4 API, there is an option to chain several of these operators using a set of the following parameters successively: andGroup, orGroup and notExpression. For example, if you need to analyze multiple groups of visitors or events where at least one group is specified by more than one condition (e.g. people who visited a certain page using a mobile phone or when an error occurred for people coming from a particular source), you can easily do it in the GA4 API while you would need separate requests using the original API.

Below you can find an example of a dimension filter with multiple chained conditions. This query can be used to check multiple reported device-specific events at once. The conditions can be simplified into:

EITHER

(deviceCategory == "Mobile" AND pagePath == "/path-to-page")

OR

(deviceCategory == "Tablet" AND pagePath == "/path-to-different-page")

{
  "dimensionFilter": {
    "orGroup": {
      "expressions": [
        {
          "andGroup": {
            "expressions": [
              {
                "filter": {
                  "stringFilter": {"value": "Mobile"},
                  "fieldName": "deviceCategory"
                }
              },
              {
                "filter": {
                  "stringFilter": {"value": "/path-to-page"},
                  "fieldName": "pagePath"
                }
              }
            ]
          }
        },
        {
          "andGroup": {
            "expressions": [
              {
                "filter": {
                  "stringFilter": {"value": "Tablet"},
                  "fieldName": "deviceCategory"
                }
              },
              {
                "filter": {
                  "stringFilter": {"value": "/path-to-different-page"},
                  "fieldName": "pagePath"
                }
              }
            ]
          }
        }
      ]
    }
  }
}

For the individual dimension or metric filter there are several options for filter types: nullFilter, stringFilter, inListFilter, numericFilter and betweenFilter. While these are not entirely innovative, they present a more systematic way of selecting the type by separating inListFilter and betweenFilter from the classical numeric and string filters. The nullFilter offers an option to check for null values in a dimension. It can be accompanied by notExpression to exclude all rows with null values in a certain dimension.
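Nested filter expressions get verbose quickly, so it can help to assemble them from small functions. The sketch below rebuilds the chained dimension filter from the example above; the helper names (`string_filter`, `and_group`, `or_group`, `not_expression`) are our own and are not part of any Google library.

```python
def string_filter(field_name, value):
    """A single condition on one dimension."""
    return {"filter": {"fieldName": field_name, "stringFilter": {"value": value}}}


def and_group(*expressions):
    """All of the given expressions must hold."""
    return {"andGroup": {"expressions": list(expressions)}}


def or_group(*expressions):
    """At least one of the given expressions must hold."""
    return {"orGroup": {"expressions": list(expressions)}}


def not_expression(expression):
    """The given expression must not hold."""
    return {"notExpression": expression}


# (Mobile AND /path-to-page) OR (Tablet AND /path-to-different-page)
dimension_filter = or_group(
    and_group(
        string_filter("deviceCategory", "Mobile"),
        string_filter("pagePath", "/path-to-page"),
    ),
    and_group(
        string_filter("deviceCategory", "Tablet"),
        string_filter("pagePath", "/path-to-different-page"),
    ),
)
request_fragment = {"dimensionFilter": dimension_filter}
```

Written this way, the Python expression mirrors the EITHER/OR summary of the conditions almost line by line.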

Cohorts

Cohort analysis was already possible in the original API. To learn more, see our article on extended options in the original API version v4. The options for cohort report requests have not changed much so far. It is still only possible to define a cohort by the date of the users' first visit, by setting the cohort dimension to firstTouchDate. You further specify a name to denote the cohort and the date range of firstTouchDate that defines it.

An improvement over the previous state is the flexibility to define the period over which the cohort should be observed. Unlike before, you specify the granularity of the report and, by setting the start and end offsets, choose how many periods of that granularity the report should cover. The original API selects the observation period for you automatically.

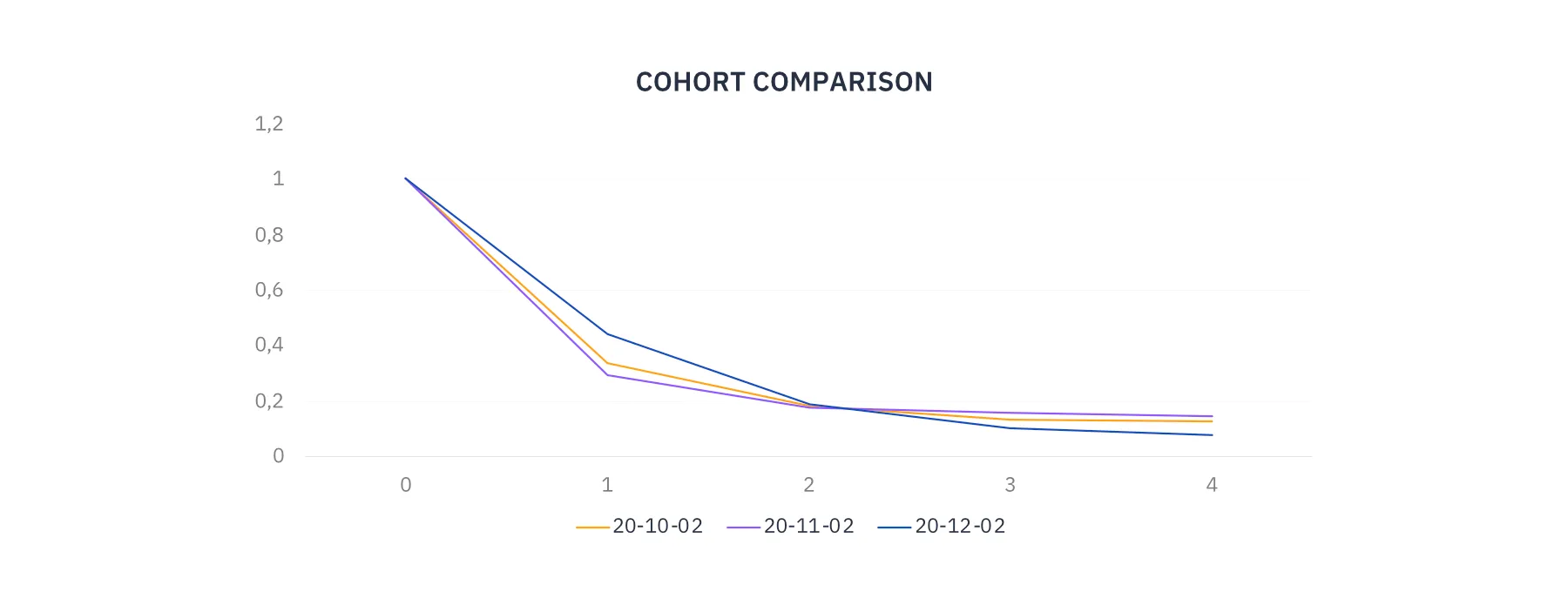

We show an example of how you can compare weekly cohorts that first arrive in various weeks before Christmas, to determine whether people who first come shortly before Christmas stay active in a similar proportion to people who first come long before it. The query asks for the proportion of active users several weeks after their first visit. The results contain data for users with first visits in one of the three selected weeks (the second week of October, the second week of November, and the second week of December). You can see how many of them returned one to four weeks after their first visit, with the weeks on the horizontal axis and the returning share on the vertical axis. As the graph shows, the selected weeks differ. In this case, people with first visits closer to Christmas returned in a higher share in the following week, but in the longer term they have a lower retention rate.

{
  "dimensions": [
    {"name": "cohort"},
    {"name": "cohortNthWeek"}
  ],
  "metrics": [
    {
      "name": "cohortRetentionFraction",
      "expression": "cohortActiveUsers/cohortTotalUsers"
    }
  ],
  "cohortSpec": {
    "cohorts": [
      {
        "name": "20-10-02",
        "dimension": "firstTouchDate",
        "dateRange": {"startDate": "2020-10-05", "endDate": "2020-10-11"}
      },
      {
        "name": "20-11-02",
        "dimension": "firstTouchDate",
        "dateRange": {"startDate": "2020-11-09", "endDate": "2020-11-15"}
      },
      {
        "name": "20-12-02",
        "dimension": "firstTouchDate",
        "dateRange": {"startDate": "2020-12-08", "endDate": "2020-12-14"}
      }
    ],
    "cohortsRange": {
      "granularity": "WEEKLY",
      "startOffset": 0,
      "endOffset": 4
    }
  }
}

For more examples, Google provides sample queries with explanations and visual representation.
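When comparing many weekly cohorts, writing each date range by hand is error-prone. A small sketch of generating the cohortSpec part of the request from the first day of each week follows; the helper names `weekly_cohort` and `cohort_spec` are our own, and the field names are taken from the query above.

```python
from datetime import date, timedelta


def weekly_cohort(name, first_day):
    """Define a cohort of users whose first visit fell in the 7 days from first_day."""
    last_day = first_day + timedelta(days=6)
    return {
        "name": name,
        "dimension": "firstTouchDate",
        "dateRange": {
            "startDate": first_day.isoformat(),
            "endDate": last_day.isoformat(),
        },
    }


def cohort_spec(cohorts, weeks):
    """Observe the given cohorts from week 0 up to the given number of weeks."""
    return {
        "cohorts": list(cohorts),
        "cohortsRange": {"granularity": "WEEKLY", "startOffset": 0, "endOffset": weeks},
    }


spec = cohort_spec(
    [
        weekly_cohort("20-10-02", date(2020, 10, 5)),
        weekly_cohort("20-11-02", date(2020, 11, 9)),
    ],
    weeks=4,
)
```

The resulting dict can be placed under the `cohortSpec` key of the request body next to the dimensions and metrics.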

Pagination

By default, the query returns up to 10 rows of results. This can be increased with the limit parameter, up to 10,000 rows per request. If you need more rows, combine limit with the offset parameter, which specifies the index of the first row to return. Therefore, if rowCount in the results shows that you have over 10,000 resulting rows, first run the query with the following parameters to get the first 10,000 rows:

{… "limit": 10000, "offset": 0}

and then with offset set to 10,000 to get the rows starting from the 10,001st (offset counts from zero):

{… "limit": 10000, "offset": 10000}
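The two-step procedure generalizes to a loop that keeps raising offset until rowCount is reached. The sketch below assumes a callable `run_report` (hypothetical, supplied by you) that takes a request body as a dict, sends it, and returns the parsed JSON response; the loop itself makes no network calls.

```python
def fetch_all_rows(run_report, body, page_size=10000):
    """Collect every row by repeating the query with a growing offset."""
    rows, offset = [], 0
    while True:
        # Same query each time; only limit and offset are overridden.
        response = run_report({**body, "limit": page_size, "offset": offset})
        page = response.get("rows", [])
        rows.extend(page)
        offset += page_size
        # Stop on an empty page or once the reported rowCount is covered.
        if not page or offset >= int(response.get("rowCount", 0)):
            return rows
```

Each page is a separate request and consumes tokens, so a larger `page_size` (within the allowed limit) means fewer calls.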

Multiple queries

To run multiple requests together, use the batchRunReports method instead of runReport. This works much like constructing multiple requests for the original API: all requests are included in an array within an outer object, and each has the same structure as a single request.

As an example, we present a set of queries where the first extracts the number of sessions on several days and the second the total number of sessions in the previous month. These queries can be used to compare the current sessions with the daily average of the last month. This example is selected for its simplicity; however, the concept can be used in a broad variety of cases, including comparing a query with its filtered counterpart or comparing multiple time periods that cannot be represented by a single dimension.

{
  "requests": [
    {
      "dateRanges": [
        {"startDate": "2021-01-04", "endDate": "2021-01-06"}
      ],
      "metrics": [
        {"name": "sessions"}
      ],
      "dimensions": [
        {"name": "date"}
      ],
      "orderBys": [
        {
          "metric": {"metricName": "sessions"},
          "desc": true
        }
      ]
    },
    {
      "dateRanges": [
        {"startDate": "2020-12-01", "endDate": "2020-12-31"}
      ],
      "metrics": [
        {"name": "sessions"}
      ]
    }
  ]
}
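Once the batch response arrives, the comparison with last month's daily average is a short calculation. The sketch below assumes the parsed response contains a `reports` array with one report per request, in the order the requests were sent, and that each report has the single-query shape shown earlier. The function name `compare_to_daily_average` is our own.

```python
def compare_to_daily_average(batch_response, days_in_baseline):
    """Express each day's sessions as a multiple of the baseline daily average.

    Assumes reports[0] holds sessions by date and reports[1] holds one row
    with the total sessions of the baseline period.
    """
    daily_report, total_report = batch_response["reports"]
    total = int(total_report["rows"][0]["metricValues"][0]["value"])
    average = total / days_in_baseline
    return {
        row["dimensionValues"][0]["value"]: int(row["metricValues"][0]["value"]) / average
        for row in daily_report["rows"]
    }


# Usage, with December 2020 (31 days) as the baseline period:
# ratios = compare_to_daily_average(parsed_batch_response, days_in_baseline=31)
```

A ratio above 1 means the day had more sessions than an average day of the baseline month.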

Pivot queries

Similar to cohort analysis, pivot queries were already available in version 4 of the original API. More about the use of pivots in the original API can be found in our already mentioned article on the original API version v4. In the GA4 API, pivots can be obtained using either the runPivotReport method (for a single request) or the batchRunPivotReports method (for multiple requests).

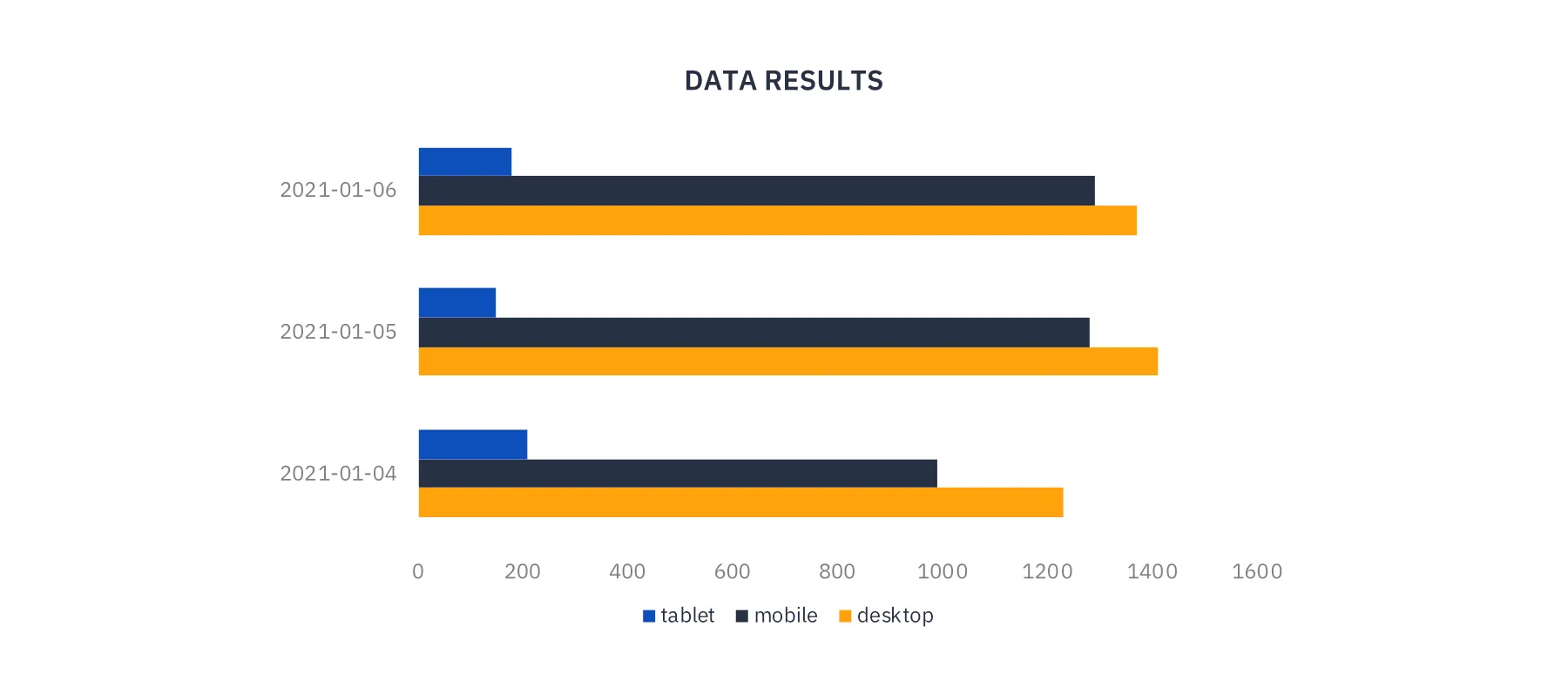

Querying data as a pivot table is useful for dividing the resulting values by further characteristics to view them from a different angle. For example, you can observe the number of errors per day using a simple query, but a pivot table allows you to extend the view by the browser they occurred in.

The structure is different from the structure in the original API, both for the queries and for the results, because the approach to pivot queries has changed. In the original API, you extend a simple query with a pivot table so that the results contain both the simple query results and, for each of their rows, the corresponding row of the pivot table. This is useful because it gives you aggregate results for each row of the pivot table. However, it also complicates the structure and the extraction of data from the results.

In the GA4 API, you can only extract the pivot table by itself (not in combination with the un-pivoted table, as in the original API). The results you receive are not structured as a pivot table; in fact, the result rows have the same content as if you had not queried a pivot table at all. The key addition is the pivotHeader, which allows the data to be transformed into the required pivot table. It relies on the fact that data can easily be transformed between the flat and pivot formats: you only need to know the list of all values, or combinations of values, that form the columns, and then you can fill in the cells. The shape of the pivot table is determined by how you distribute the dimension names across the items of the pivots array. For dimensions listed together in the fieldNames parameter of one item, the pivotHeader gives you the combinations of their values; for a single dimension in an item, it gives you the list of its values. At the moment, it is up to you to construct the pivot table from these indicators.

{
  "dimensions": [
    {"name": "date"},
    {"name": "deviceCategory"}
  ],
  "metrics": [
    {"name": "sessions"}
  ],
  "dateRanges": [
    {"startDate": "2021-01-04", "endDate": "2021-01-06"}
  ],
  "pivots": [
    {"fieldNames": ["deviceCategory"]},
    {"fieldNames": ["date"]}
  ]
}

| date | desktop | mobile | tablet |
|---|---|---|---|
| 2021-01-04 | 1230 | 990 | 210 |
| 2021-01-05 | 1410 | 1280 | 150 |
| 2021-01-06 | 1370 | 1290 | 180 |
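Since the API returns flat rows, the pivot table has to be assembled on the client. A minimal sketch of that step follows; it assumes the parsed response carries `dimensionHeaders`, `metricHeaders`, and `rows` in the same shape as the single-query results shown earlier, and that each row holds one combination of dimension values. The function name `to_pivot_table` is our own.

```python
from collections import defaultdict


def to_pivot_table(response, row_dim, column_dim, metric):
    """Reshape flat report rows into {row_value: {column_value: metric_value}}."""
    # Look up positions by header name instead of assuming a fixed order.
    dim_index = {h["name"]: i for i, h in enumerate(response["dimensionHeaders"])}
    met_index = {h["name"]: i for i, h in enumerate(response["metricHeaders"])}
    table = defaultdict(dict)
    for row in response["rows"]:
        row_key = row["dimensionValues"][dim_index[row_dim]]["value"]
        col_key = row["dimensionValues"][dim_index[column_dim]]["value"]
        table[row_key][col_key] = row["metricValues"][met_index[metric]]["value"]
    return dict(table)


# Usage, matching the query above (dates as rows, device categories as columns):
# pivot = to_pivot_table(parsed_response, "date", "deviceCategory", "sessions")
```

The nested dict can then be passed to `pandas.DataFrame.from_dict(pivot, orient="index")` if a tabular object like the one above is needed.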

Python example

Until now we have talked about the JSON-structured queries used in the request body of cURL, HTTP, or JavaScript requests. However, it is possible to run the requests in Python as well, using Google's Python library called google-analytics-data. The Python code requires a path to an access token for a service account and the ID of a GA4 property to which this service account has access.

We include sample code along with information on how to prepare your environment. To run the Python code, you first need to set up a virtual environment, then prepare a file with the path to the access token for the service account, and then set the property_id variable in the code and run it. For the setup on Windows, run the commands below in Command Prompt. Replace the placeholder <your-env> with the name you selected for your environment.

pip install virtualenv
virtualenv <your-env>
<your-env>\Scripts\activate
<your-env>\Scripts\pip.exe install google-analytics-data pandas python-dotenv

To prepare access to the data, you need a service account that has access to the GA4 property data. The local path to its token file needs to be saved under the parameter SERVICE_TOKEN_PATH in a .env file located in the same folder as the Python code. The format of the file should be as follows:

SERVICE_TOKEN_PATH="C:/Users/YourUser/Documents/service_account_token.json"

If you need any help creating the service token, refer to the official documentation.

When you have set up the virtual environment, prepared the .env file, and set the GA4 property ID in the parameter property_id, you can run the Python code to obtain the sample results. The code loads and prints data about active users and the number of sessions by date, country, and city since the beginning of 2021. For more information about the library, you can check the client library source code.

from dotenv import load_dotenv
import os
import pandas as pd
from google.analytics.data_v1beta import BetaAnalyticsDataClient
from google.analytics.data_v1beta.types import DateRange, Dimension, Metric, RunReportRequest

# Settings
# (the .env file is located next to this script and contains
# SERVICE_TOKEN_PATH=[local-path-to-Google-service-token-with-data-access])
load_dotenv()
SERVICE_TOKEN_PATH = os.getenv('SERVICE_TOKEN_PATH')
property_id = '<set-your-property-ID-here>'


def sample_run_report(property_id):
    """Runs a simple report on a Google Analytics 4 property."""
    client = BetaAnalyticsDataClient.from_service_account_file(SERVICE_TOKEN_PATH)
    request = RunReportRequest(
        property=f"properties/{property_id}",
        dimensions=[Dimension(name='date'), Dimension(name='country'), Dimension(name='city')],
        metrics=[Metric(name='activeUsers'), Metric(name='sessions')],
        date_ranges=[DateRange(start_date='2021-01-01', end_date='yesterday')])
    return client.run_report(request)


def sample_extract_data(response):
    """Extracts data from a GA4 Data API response as a pandas DataFrame."""
    # Column names: dimension headers first, then metric headers
    columns_list = [header.name for header in response.dimension_headers]
    columns_list += [header.name for header in response.metric_headers]
    # One list of values per row, in the same order as the columns
    data_rows = [
        [v.value for v in row.dimension_values] + [v.value for v in row.metric_values]
        for row in response.rows
    ]
    return pd.DataFrame(data_rows, columns=columns_list)


if __name__ == "__main__":
    query_response = sample_run_report(property_id)
    print(sample_extract_data(query_response))

Summary

The newly published Google Analytics Data API is constructed to query data from Google Analytics 4. In this article, we summarized its similarities and differences to the GA Reporting API v4 for Universal Analytics. The basic parameters and results’ structure are very similar between these approaches but there are some new features introduced (like Quotas report) and there are several steps towards consistency between dimensions and metrics and between different types of queries. Thus, the GA Data API does not seem to be very difficult to transition to and at the same time, it brings the unique experience of working with the new Google Analytics.